Yes, I'm a few days late for David Kelly’s Learning Styles ‘Awareness’ Day, so I hope you will forgive me. While most of the recent posts on using learning styles in instructional design have been against using them, I'm going to take a slightly different position: not that we need to cater to each individual style, but that learning styles may be helpful when designing learning platforms.

So far the learning style debate has been mostly two tiles of a different color laid side by side. You are either fer it or agin it: we should assess student learning styles to improve learner outcomes, versus learning style assessments are unreliable, thus they should never be used. However, I see the debate more as a mosaic that allows multiple patterns to occur.

Sensing and Intuitive Learning Styles

Perhaps the most critical study on learning styles is Coffield, Moseley, Hall, and Ecclestone's Learning styles and pedagogy in post-16 learning: A systematic and critical review. While the authors mostly found that matching the form of instruction to individual learning styles did not improve learning, there are some interesting exceptions throughout their paper. For example, on page 67 they write:

“More positively still, Katz (1990) in a quasi-experimental study of 44 occupational therapy students in the US and 50 in Israel, hypothesised that students whose learning styles matched the teaching method would perform better (ie more effectively) and would need less time to study outside class (ie more efficiently). The findings in both countries supported the premise that ‘the better the match is between students' individual characteristics and instructional components, the more effective or efficient the learning program is’ (Katz 1990, 233). But even this conclusion needed to be qualified as it applied only to higher-order cognitive outcomes and not to basic knowledge.”

So, in search of a good paper on using learning styles in higher-order cognitive skills, I came across An Investigation into the Learning Styles and Self-Regulated Learning Strategies for Computer Science Students, by Alharbi, Paul, Henskens, and Hannaford. For their study they use the Felder-Silverman Learning Style model, which has four dimensions:

- Perception (Sensing or Intuitive) describes the ways in which learners tend to perceive information. Sensing learners prefer to learn facts, are comfortable with details, and tend to solve problems using well-established methods. Intuitive learners prefer abstract concepts, theories, and mathematical formulas, and seek innovation and new ideas when solving problems.

- Input (Visual or Verbal) distinguishes between learners based on their preferred medium for the presentation of information. Visual learners prefer to learn using visual medium of presentations, such as pictures, charts, and diagrams. Verbal learners prefer spoken or written materials. Both types of learners benefit when material is delivered using a combination of visual, verbal, and written forms.

- Processing (Active or Reflective) evaluates learners based on the way they process information. Active learners prefer to learn material by using it, whereas reflective learners prefer to think about how things work before actually trying them out. Active learners are typically more comfortable working in groups than reflective learners.

- Understanding (Sequential or Global) looks at how users understand new information. Sequential learners like to follow a step-by-step linear approach that focuses on the connections between the different parts of the learning material. Global learners prefer to grasp the full picture before narrowing into the details.

The study was not about assessing the learners' styles and then catering to their preferred styles, but rather about assessing them on the above dimensions and then testing them in a core computer science course to see how learners on each dimension performed. The authors' correlation analysis showed that while three of the dimensions (Input, Processing, and Understanding) were not statistically significant, the Perception dimension had a significant impact on the students' results in the examination, with the t-tests confirming that sensing students were significantly outperformed by intuitive students.

The authors note that the majority of students in the study (65.8%) were sensing learners, with 39.5% having a moderate or strong preference to that learning style. However, 21.0% of students have a moderate or strong preference to intuitive learning over sensing learning. This suggests that there is a need for learning material for both types of learners, but the greater emphasis should be placed on reducing abstraction to better meet the requirements of the sensing learners, especially when it is seen that intuitive learners performed significantly better on the midterm examination.
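To make the group comparison described above a little more concrete, here is a minimal sketch of how a t-test between intuitive and sensing students could be computed. The scores are hypothetical numbers invented for illustration, not data from the Alharbi et al. study, and the choice of Welch's unequal-variance form is my assumption; the paper is only described here as using "t-tests". The function name `welch_t` is also just an illustrative helper.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Return (t statistic, approximate degrees of freedom) for
    Welch's unequal-variance t-test between two samples."""
    n_a, n_b = len(group_a), len(group_b)
    # Squared standard error of each group mean (sample variance / n)
    se_a = variance(group_a) / n_a
    se_b = variance(group_b) / n_b
    t = (mean(group_a) - mean(group_b)) / sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical exam scores, invented for illustration only
intuitive_scores = [78, 85, 72, 90, 81, 76]
sensing_scores = [65, 70, 58, 74, 69, 62, 71, 60]

t_stat, dof = welch_t(intuitive_scores, sensing_scores)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

A positive t statistic here means the first group (intuitive) scored higher on average. Whether the difference counts as significant would still depend on comparing the statistic against a t distribution with the computed degrees of freedom.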

While it was just one study, it did seem to follow the patterns of a couple of studies discussed in the Coffield et al. paper:

- Woolhouse and Bayne (2000) noted that individual differences in the use of intuition are correlated with the sensing-intuitive dimension (p50)

- Allinson and Hayes (1996) report that intuitive students performed significantly better than analytic students on the Watson-Glaser Critical Thinking Appraisal (p85)

The authors write that instructors often tend to use more intuitive types of instruction (abstract concepts, theories, etc.) rather than the more sensing types of instruction (such as facts, details, and problem-solving methods). While this might at first seem laudable, in that they are trying to teach the learners to operate in a more complex world that seeks innovation and new ideas when solving problems, learners often need a basic scaffold of facts and basic problem-solving methods. Yes, some of the learning platforms that we provide are for complex environments that do not have proven problem-solving methods, but the least we should do is provide learners with some simple facts and heuristics. For example, branching scenarios are often used in elearning platforms, but we expect learners to jump right in and guess their way through the activity.

An example of this is one of the myths in our profession: that ISD or ADDIE was designed only for classrooms, and that there is a lack of rules for when classroom training should be used (we use it more often than it is needed). Yet the Armed Forces came up with a simple heuristic back in the 1980s, described in ADDIE Does More Than Classrooms. Thus this heuristic should be given to learners studying to be instructional/learning designers BEFORE they attempt a branching scenario or similar activity.

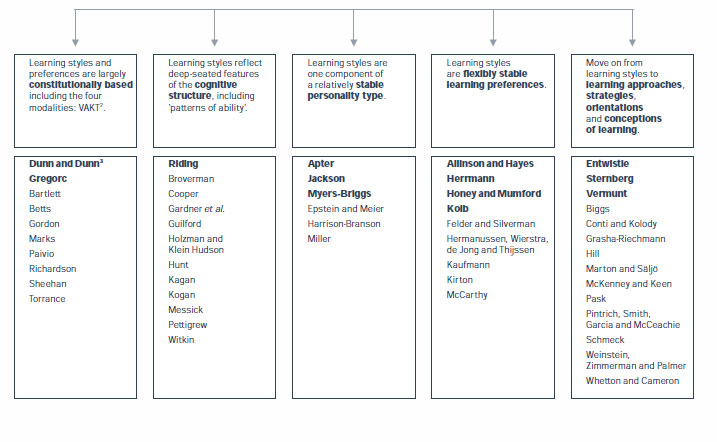

The Continuum of Learning Styles

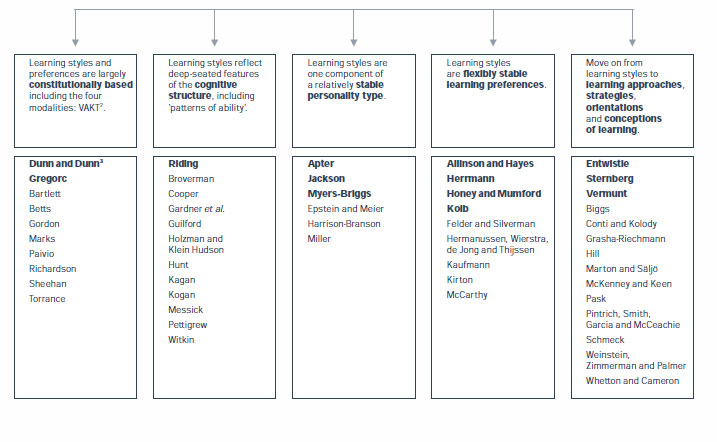

Coffield et al. note that the various theories of learning styles can be placed on a continuum (pp 9-10), as shown in the chart. The ones on the left are considered more constitutionally fixed styles (innate), while the ones on the right are considered more flexible:


The Sensing and Intuitive learning styles discussed above fall on the right side of the continuum. Thus, depending upon the learner's knowledge and skills, the subject or task, and/or the type of instruction, a learner could fall on either the sensing or intuitive side of the dimension (however, from the studies noted in this post, the majority seem to fall on the sensing side).

One of the styles that fall strongly on the left side of the continuum is VAK (Visual, Auditory, and Kinesthetic), which poses a conundrum in learning styles.

The VAK Conundrum

In an interesting study, Visual Learners Convert Words to Pictures, functional magnetic resonance imaging (fMRI) technology was used to scan subjects' brains while they performed a novel psychological task involving pictures that could be easily named and words that could be easily imagined. They found that the more strongly an individual identified with a visual cognitive style, the more they activated the visual cortex when reading words. Conversely, fMRI scans also showed that the more strongly an individual identified with a verbal cognitive style, the more activity they exhibited in a region of the brain associated with phonological cognition when faced with a picture.

Thus it seems our tendency to identify with being a visual or verbal learner is hardwired in us. However, visual preference does not always equal spatial aptitude (Ruth Clark & Chopeta Lyons, Graphics for Learning, 2004). Spatial aptitude is the ability to generate and retain spatial images as well as transform images in ways that support visual reasoning.

Thus the conundrum: we may identify with being a visual or verbal learner (indeed, we may even be wired for one or the other), but it does not mean we are a good visual or verbal learner! So even if we know what our preferred style is, we need to think twice before choosing a learning method just because it matches that style, whether we are training others or learning something on our own.

However, Clark and Lyons give us a few rules to follow:

1. Learners with low prior knowledge need graphics that are congruent with text (and preferably the text should be audio to prevent cognitive overload).

2. Learners with high prior knowledge need only words or visuals, not both, although one study did suggest that the diagram alone was best.

3. Encourage visual literacy. Some learners tend to view visuals as fluff, thus they tend to ignore them even though they might be their best means of learning. One method of encouraging their use is to present a visual and ask a question whose answer can only be derived by examining the visual.

My Three Tiles in the Mosaic of Learning Styles

Sensing and Intuitive Learning Styles, The Continuum of Learning Styles, and The VAK Conundrum are my three tiles in the mosaic of learning styles. What are yours?