In her post, Questioning Gagné and Bloom’s Relevance, Christy Tucker describes how we often get caught up in theories without really looking at whether the research supports them. In this post, I would like to point out some of the research and newer findings.

While some think the Nine Steps are ironclad rules, it has been noted at least since 1977 (Good & Brophy, p. 200) that the nine steps are “general considerations to be taken into account when designing instruction. Although some steps might need to be rearranged (or might be unnecessary) for certain types of lessons, the general set of considerations provide a good checklist of key design steps.”

1. Gain attention

In the military we called this an interest device: a story or some other vehicle for capturing the learners' attention and helping them see the importance of learning the tasks at hand. For example, when I was teaching learners to load and unload trailers with a forklift, I would search the OSHA reports for the latest incident report on a forklift operator who was decapitated after sticking their head out of the forklift's protective cage to get a better view when entering a trailer, and then getting it caught between the bars supporting the forklift's protective top and the side of the trailer (it happens more often than we care to think about). This would become the basis for a story on why they needed to pay attention: the forklift may be small, but it weighs several tons and can easily slice off a limb or another body part if not treated with proper respect.

Wick, Pollock, Jefferson, and Flanagan (2006) describe how research supports extending the interest device into the workplace in order to increase performance when the learners apply their new learning to the job. This is accomplished by having the learners and their managers discuss what they need to learn and be able to perform when they finish the training. This preclass activity ends in a mutual contract between the learners and managers on what is expected to be achieved from the learning activities (this is also closely related to the next step).

2. Tell learners the learning objective

Marzano (1998, p.94) reported an effect size of 0.97 (which indicates that achievement can be raised by 34 percentile points) when goal specification is used. When students have some control over the learning outcomes, there is an effect size of 1.21 (39 percentile points). This is the beauty of using Wick, Pollock, Jefferson, and Flanagan's mutual contract.
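For readers who want to see where the percentile figures come from, here is a rough sketch. It assumes the usual normal-curve conversion, in which an average learner (50th percentile) moves up by the effect size in standard deviation units; the function names are my own, and the source does not spell out the exact method or rounding used, so results can differ from the published figures by a point.

```python
import math


def normal_cdf(z: float) -> float:
    """Cumulative probability of the standard normal distribution at z."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def percentile_gain(effect_size: float) -> float:
    """Percentile points gained by a learner who starts at the 50th percentile.

    Assumes normally distributed scores (an assumption of this sketch).
    """
    return (normal_cdf(effect_size) - 0.5) * 100.0


# The two effect sizes quoted above for goal specification
for d in (0.97, 1.21):
    print(f"effect size {d:.2f} -> about {percentile_gain(d):.1f} percentile points")
```

Under this assumption, 1.21 works out to roughly 39 points, matching the reported figure, while 0.97 comes out slightly under the reported 34, which is presumably a rounding difference.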

Of course, the problem that some trainers and instructional designers run into is reciting the learning objectives to the learners word for word, rather than breaking them down into less formal statements.

3. Stimulate recall

This step builds on prior learning and forms the basis of scaffolding: 1) building on what the learners know, 2) adding more details, hints, information, concepts, feedback, etc., and 3) allowing the learners to perform on their own. Allan Collins, John Seely Brown, and Ann Holum (1991) note that scaffolding is the support the master gives apprentices in carrying out a task. This can range from doing almost the entire task for them to giving occasional hints as to what to do next. Fading is the notion of slowly removing the support, giving the apprentice more and more responsibility.

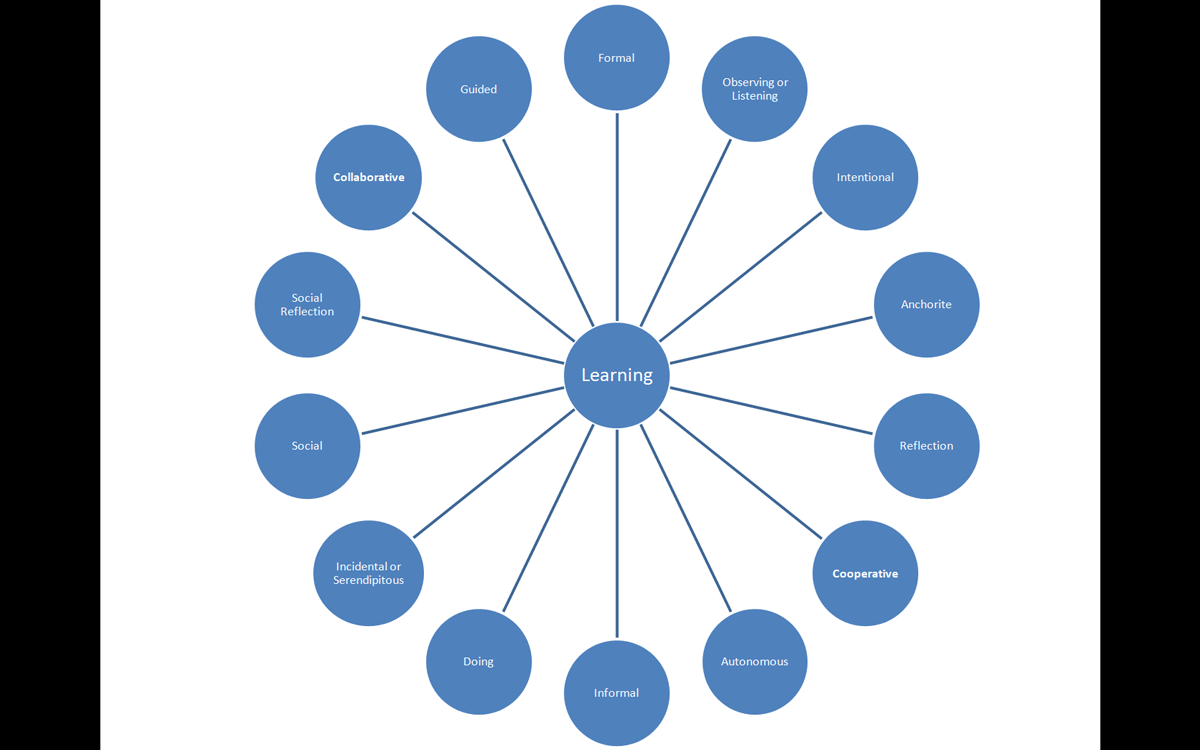

Part of stimulating recall is having the learners take notes and draw mind maps. Learning is enhanced by encouraging the use of graphic representations when taking notes (mind or concept maps). While normal note taking has an overall effect size of 0.99, indicating a percentile gain of 34 points, graphic representations produced a percentile gain in achievement of 39 points (Marzano, 1998). One of the most effective of these techniques is semantic mapping (Toms-Bronowski, 1980), with an effect size of 1.48 (n=1), indicating a percentile gain of 43 points. With this technique, the learner represents the key ideas in a lesson as nodes (circles) with spokes depicting key details emanating from the node.

4. Present the stimulus, content

Implement (nuff said)

5. Provide guidance, relevance, and organization

Kind of redundant as it relates to the other steps.

6. Elicit the learning by demonstrating it (modeling and observational learning)

Albert Bandura noted that observational learning may or may not involve imitation. For example, if you see someone driving in front of you hit a pothole and you then swerve to miss it, you learned through observation, not imitation (if you had learned through imitation, you would also have hit the pothole). What you learned was the information you processed cognitively and then acted upon. Observational learning is much more complex than simple imitation. Bandura's theory is often referred to as social learning theory, as it emphasizes the role of vicarious experience (observation) of people impacting people (models). Modeling has several effects on learners:

- Acquisition - New responses are learned by observing the model.

- Inhibition - A response that otherwise may be made is changed when the observer sees a model being punished.

- Disinhibition - A reduction in fear by observing a model's behavior go unpunished in a feared activity.

- Facilitation - A model elicits from an observer a response that has already been learned.

- Creativity - Observing several models performing and then adapting a combination of characteristics or styles.

7. Provide feedback on performance

As Christy's post noted, performance and feedback are good.

8. Assess performance, give feedback and reinforcement

Related to above.

9. Enhance retention and transfer to other contexts

We often think of transfer of learning as just being able to apply the new skills and knowledge to the job, but it actually goes beyond that. Transfer of learning is the phenomenon of learning a task more quickly and developing a deeper understanding of it when we bring some knowledge or skills from previous learning. Therefore, to produce positive transfer of learning, we need to have the learners practice under a variety of conditions. For more information, see Transfer of Learning.

References

Collins, A., Brown, J. S., & Holum, A. (1991). Cognitive apprenticeship: Making thinking visible. American Educator, 6-46.

Good, T. & Brophy, J. (1990). Educational Psychology: A realistic approach. New York: Holt, Rinehart, & Winston.

Marzano, R. J. (1998). A Theory-Based Meta-Analysis of Research on Instruction. Aurora, Colorado: Mid-continent Regional Educational Laboratory. Retrieved May 2, 2000 from http://www.mcrel.org/products/learning/meta.pdf

Wick, C., Pollock, R., Jefferson, A., & Flanagan, R. (2006). Six Disciplines of Breakthrough Learning: How to Turn Training and Development Into Business Results. San Francisco: Pfeiffer.